
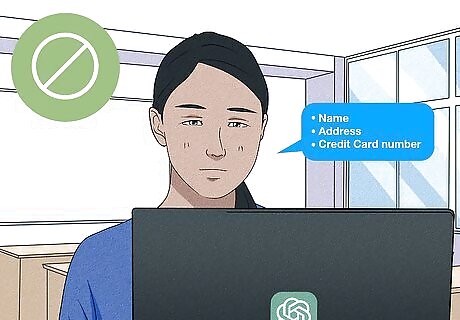

- ChatGPT is safe to use if you don't share private information.

- Your conversations with ChatGPT are not confidential and may be used to train future versions of the model.

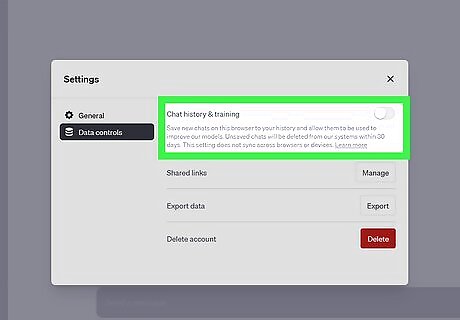

- You can opt out of having your data used to train OpenAI's models, but your chats will still be stored for 30 days so OpenAI can monitor for abuse.

Is ChatGPT safe?

ChatGPT is safe to use if you don't share sensitive data. Using ChatGPT to answer questions, generate content, write code, and perform other common tasks is generally safe as long as you stay mindful of the information you share. OpenAI, the company behind ChatGPT, implements many security measures to help keep ChatGPT a safe and (mostly) accurate tool. Still, using ChatGPT carries risks, and it's important to understand them before trusting the AI chatbot with your data.

ChatGPT Risks

Conversations are not 100% private. Any information you give ChatGPT, whether in prompts, file uploads, or feedback, is stored on OpenAI's servers and can be viewed by OpenAI's staff. By default, your conversations can also be used to further train the language model. That means ChatGPT may "learn" from your private information or your company's confidential data and inadvertently reveal it to other users in the future. See How to Stay Safe with ChatGPT to learn how to opt out of having your data used to train future models.

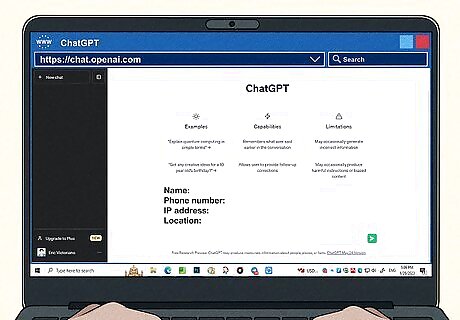

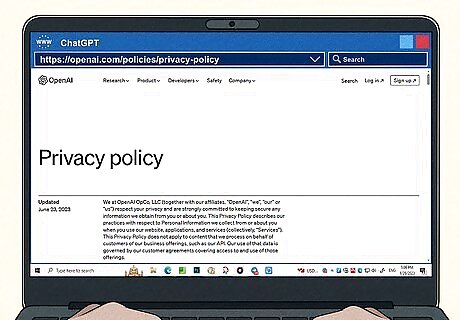

ChatGPT collects a lot of data about you. Beyond your conversations, OpenAI also stores other information about you, including your name, phone number, IP address, location, and any identifying information that can be obtained through cookies. According to OpenAI's privacy policy, the company will not sell your data to third parties. However, the policy states that OpenAI may share your data with affiliates, vendors, service providers, and law enforcement, and the policy itself could change in the future.

Responses may be biased or inaccurate. Because ChatGPT was trained on such a wide variety of data, it may generate incorrect answers as well as biased or offensive content. ChatGPT also cannot reliably cite its sources, so there's no way to tell whether its information comes from an unreliable source. If you use ChatGPT for important research, you may end up with inaccurate information.

ChatGPT can be used for malicious purposes. Because ChatGPT is so powerful, hackers can use it to create malware, write content for phishing attacks and scams, and carry out cyberattacks using data from the dark web. ChatGPT can also be combined with bots to create fake news articles and other misleading content meant to trick readers.

No security measures are 100% failsafe. While OpenAI takes many precautions to protect your personal information and sensitive data, there's no guarantee that its servers and security won't be compromised. Even well-secured services get hacked, which can put sensitive information like passwords and contact details into the wrong hands.

Security Measures

OpenAI takes many precautions to keep ChatGPT safe. Even though using ChatGPT carries risks, OpenAI demonstrates its commitment to security and privacy in several ways:

- Compliance: OpenAI has been audited for SOC 2/3 compliance and states that it complies with data privacy laws such as the CCPA (California Consumer Privacy Act) and the GDPR (EU General Data Protection Regulation).
- Content moderation: ChatGPT has built-in filters to keep it from being used for nefarious purposes. Conversations are also monitored for abuse, which makes the tool less useful to scammers and hackers.
- Bug bounty program: OpenAI pays ethical hackers to probe ChatGPT for vulnerabilities. A hacker who finds a security bug receives a bug bounty award.
- Personal data protection: To avoid training future models on confidential data, OpenAI attempts to remove personal information from its training datasets.
- Data security: All collected data is backed up, encrypted, and stored in secure facilities, where only approved staff can access it.
- Reinforcement training: After the language model behind ChatGPT was initially trained on massive amounts of data from the internet, human trainers fine-tuned it (a process known as reinforcement learning from human feedback) to reduce misinformation, offensive language, and other errors. While errors can still appear, this extra training shows that OpenAI is serious about ChatGPT producing high-quality answers and content.
- Transparency: You can review ChatGPT's security measures in detail at https://trust.openai.com.

How to Stay Safe with ChatGPT

Read ChatGPT's privacy policy and terms of use thoroughly. Before giving ChatGPT any of your personal information, make sure you read and understand OpenAI's policies. Bookmark them so you can refer back to them often, since they can change without warning.

- ChatGPT privacy policy: https://openai.com/policies/privacy-policy
- ChatGPT terms of use: https://openai.com/policies/terms-of-use

Disable chat history and model training. You can prevent your conversations with ChatGPT from being saved to your chat history and opt out of having your data used to train OpenAI's models. To opt out, click the three dots at the bottom of ChatGPT, go to Settings > Data controls, and turn off the "Chat history & training" switch. Even if you opt out, your chats will still be stored on OpenAI's servers and accessible to staff for 30 days so they can be monitored for abuse.

Verify ChatGPT's responses before accepting them as fact. ChatGPT can "hallucinate" facts and provide incorrect information. If you plan to use one of ChatGPT's responses for anything important, check it against reliable sources that you can cite.

Only access ChatGPT through its official website and apps. To avoid supplying personal data to scammers or apps pretending to be ChatGPT, only use ChatGPT by visiting https://chat.openai.com or by downloading the official ChatGPT app published by OpenAI from the App Store or Google Play. Apps from other developers that claim to be ChatGPT are not the real ChatGPT.

Don't install browser extensions and apps you don't trust. Some apps and browser extensions that claim to bring ChatGPT functionality to your browser may be harvesting your data. Always research extensions and apps before installing them on your computer, phone, or tablet.

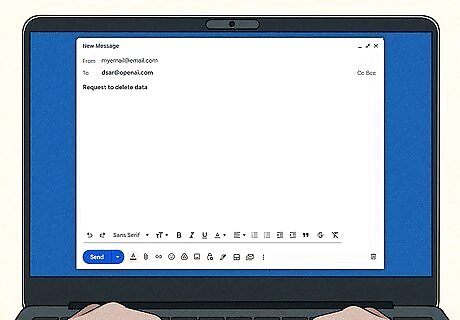

Request to delete your data. If you don't feel comfortable with ChatGPT's privacy and security measures, you can ask OpenAI to delete your ChatGPT account and data. You can email [email protected] to do so.
